Unfair advantage? How OEMs get ahead with Custom Compute

Original Equipment Manufacturers (OEMs) constantly face the challenge of delivering cutting-edge products that meet the evolving demands of their customers. In the world of technology, where innovation is the key to staying ahead, the choice of computing solutions plays a pivotal role in shaping the success of OEMs. In the past, OEMs have relied […]

Codasip 700 RISC-V processor family: Bringing the world of Custom Compute to everyone

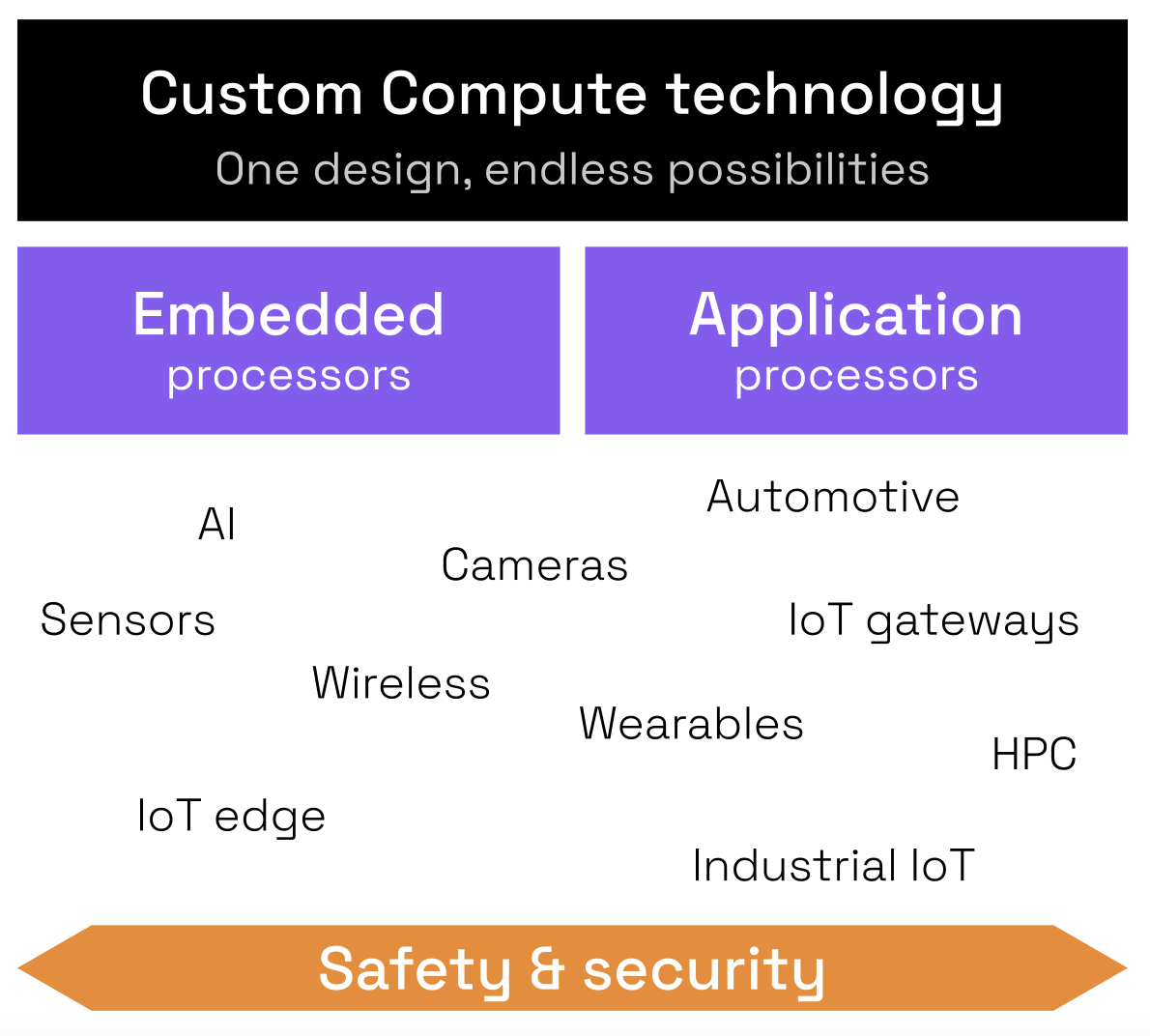

Today, technology innovators must have new ways to create differentiated products. How are they supposed to meet the demand for more computational performance when semiconductor scaling laws are showing their limits? There is only one way: compute that is customized for specific needs. And what do we need for that? Several aspects: Architecture optimization, […]

What you should ask for instead of just PPA

SoC designers would really like to be able to compare PPA numbers of digital IP. However, as explained in our previous article, this is mostly impossible as available numbers usually do not apply to your use case. It is easy to be misled by PPA. So now, how do you select the best digital IP? […]

Why you should stop asking for PPA

As a topic that is subject to intense discussions, PPA (Power, Performance & Area) is certainly something that everyone in the semiconductor industry is focusing on. Who wouldn’t want a smaller and cheaper circuit that would consume less power and be much more capable than the previous generation? But you should stop asking for PPA. Or at […]

Creating specialized architectures with design automation

With semiconductor scaling slowing down if not failing, SoC designers are challenged to find ways of meeting the demand for greater computational performance. In their 2018 Turing lecture, Hennessy & Patterson pointed out that new methods are needed to work around failing scaling and predicted ‘A New Golden Age for Computer Architecture’. A key approach in addressing this challenge […]

Domain-Specific Accelerators

For about fifty years, IC designers have relied on different types of semiconductor scaling to achieve gains in performance. How do they keep achieving those gains now that Moore’s Law and Dennard Scaling have broken down?

What is an ASIP?

ASIP stands for “application-specific instruction-set processor” and simply means a processor which has been designed to be optimal for a particular application or domain. So what exactly is the difference from a general-purpose processor?

What is CodAL?

CodAL is central to developing a processor core using Codasip Studio. It is a C-based language developed from the outset to describe all aspects of a processor including both the instruction set architecture (ISA) and microarchitecture.

How to Choose an Architecture for a Domain-Specific Processor

If you are going to create a domain-specific processor, one of the key activities is to choose an instruction set that matches your software needs. So where do you start?