The Chinese AI company DeepSeek took the technology industry, and Wall Street, by storm with a language model reportedly achieving 10x higher efficiency than those of AI industry leaders. You have seen the news and might be getting tired of the endless articles piggybacking on it, but I would like to offer a different perspective. According to its technical report, DeepSeek trained its model on a cluster of 2,048 Nvidia H800 GPUs. If this is true, it used a lot less computing power than other leading AI players. The news hit Nvidia’s stock price hard, as markets took it to mean that AI would need less compute… But is that really the case? Is it as obvious as it seems? And why haven’t some other tech companies reacted the way the markets did?

As the dust settles on the media frenzy around DeepSeek, there are surely plenty of lessons to be learned. One is that DeepSeek built and trained its models on top of existing open-source models, making use of investments already made by others. The compute already spent training openly released models can never be retroactively restricted: those models remain available to everyone.

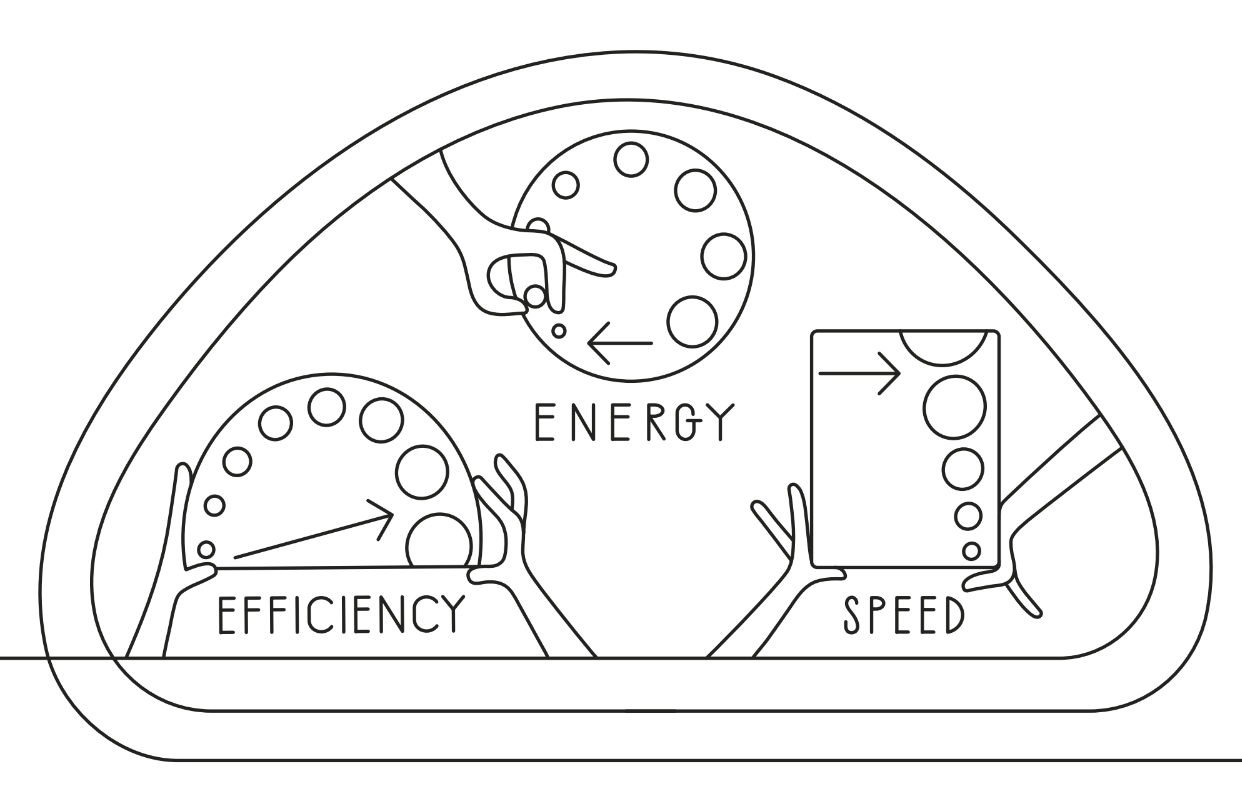

The fact that DeepSeek achieved performance similar to that of leading AI players with fewer hardware resources has sparked a debate about how much compute AI really needs. If you can reach this performance with less hardware and open-source models, do you even need more computing power? Well, even with model distillation, having access to only limited compute forced DeepSeek to heavily optimize its software (again, as described in its technical report).

According to DeepSeek, it used various techniques to optimize its software for the limited hardware it had access to, which helped it achieve these performance gains with less computing power. Of course, nothing comes for free: tailoring software to specific hardware typically reduces flexibility. As with everything else in life, you need to find the right balance.

Optimizing software for hardware to boost efficiency

DeepSeek has demonstrated how innovation can leap forward when hardware and software are considered together, so that every component is used to its full potential. The fact is, by optimizing software for hardware, you can significantly increase efficiency.

So, what should others do to stay ahead? The good news is that everyone can now build upon DeepSeek’s open models. We can expect other AI players to regain their competitive edge within the next few months, likely by building on the step forward that DeepSeek has made openly available.

However, possibly the most poignant and most overlooked point of this story is that, even with these software optimizations, demand for hardware compute never really dropped! The demand for more computing power, and for more efficient computing, remains insatiable. Any perceived drop in demand will be consumed tenfold tomorrow, or the day after, by the next unknown step on this AI road. Unlike limited commodities such as gold or bitcoin, computing power is in theory unlimited. Only in theory, because in practice hardware can feel limited: Moore’s law is coming to an end, Dennard scaling has broken down, or, as this story shows, there are simply few suppliers of the type of compute perceived to be needed (GPUs).

But imagine the possibilities if you were free from those limits, and could own and optimize your hardware alongside your software. That would unlock even greater potential!

Co-optimizing hardware and software

At Codasip, we are championing Custom Compute. Custom Compute enables this ownership and lets innovators co-optimize hardware and software performance for the unique workloads and operational requirements of their products. Off-the-shelf processors, or GPUs in this case, offer a generic, one-size-fits-all approach. At Codasip, by contrast, we offer the flexibility to tailor hardware to the specific needs of the software application, and to understand the impact of the software on the hardware. Our Custom Compute offering ranges from very small embedded applications to very large high-performance computing systems.

Finally, while we are on the topic of GRAPHICS Processing Units, have you ever wondered about the dead silicon inside them? It sits there waiting to put a pixel on a screen, taking up precious power and expensive die area while the chip crunches invisible neural network tasks. Well, you have just identified your first optimization: removing it might be nice! Now you will just have to wait in line until Nvidia decides it’s OK to do that, and pay for it with the next trillion of your money. …Or do you?

Customized processors are the next evolutionary stage and should be available for companies of all sizes. We are here for it.