In the previous blog post, we looked at the ruinous costs of allowing unsafe memory access. However, processors generally already have protection mechanisms designed into them. Can these be relied upon to give adequate protection? In this post, we look at some common mechanisms and consider how effective they are against common memory-related vulnerabilities.

What is processor integrity?

When we think of integrity problems with processors, we generally think of malicious code injection: for example, loading malware during boot, installing malware during a firmware update, or importing malicious code from an app or website.

However, not all integrity issues have malicious causes. Data corruption and program crashes can be the direct result of how software is written. Similarly, except in simple bare-metal embedded applications, not every program should be able to access every processor resource.

Various methods are commonly used to address processor integrity, including:

- Chain of trust

- Privilege modes

- Memory protection

- Execution enclaves.

Let’s now briefly consider each of these.

Chain of trust

A chain of trust is associated with starting up a processor system. It is essential that, from the outset, the processor executes only trusted code.

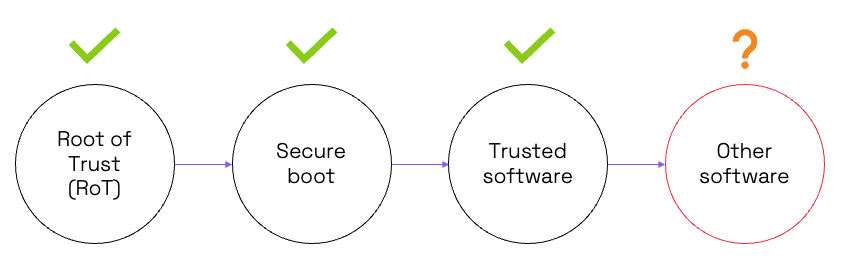

A chain of trust establishes a sequence of loading successively more complex software elements. The loading of a new element is conditional on the earlier steps being authenticated. The starting point is a hardware root of trust (RoT). This is the anchor point for the whole chain. The RoT will typically contain cryptographic keys, or the ability to generate keys and the authentication algorithm for the next stage in the chain. The RoT is based on immutable hardware such as a ROM or one-time programmable (OTP) memory. Keys are either stored there too, or generated by means of a physically unclonable function (PUF).

Chains of trust vary in complexity but in this very simple example, the next stage would be to bring up the secure boot loader. The loader would be checked for trustworthiness by an authentication algorithm such as a cryptographic signing function. In turn the bootloader would install firmware and check that it was trusted. The firmware might be a real-time operating system (RTOS) such as FreeRTOS or a rich operating system (OS) such as Linux.

Figure 1: Simple example of a chain of trust. Source: Codasip.

Finally, applications and other software can be run. Depending on the type of system, this software may be trusted by the user and/or be from a third party with an unknown trust level.
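To make the chain concrete, here is a minimal Python sketch of a boot sequence in which each stage is started only if its image authenticates. It assumes a hypothetical device key `ROT_KEY` held by the RoT and uses HMAC-SHA256 from the standard library as a stand-in for the digital signature verification a real secure boot would perform. For brevity every stage is checked against the same RoT key, whereas real chains typically pass keys or certificates from one stage to the next. The stage names and images are placeholders.

```python
import hashlib
import hmac

# Hypothetical device key. In real hardware it would sit in ROM/OTP
# or be generated by a PUF, and software could not read it.
ROT_KEY = b"hypothetical-device-unique-key"


def tag(image: bytes) -> bytes:
    """Authentication tag for an image (HMAC-SHA256 standing in for a signature)."""
    return hmac.new(ROT_KEY, image, hashlib.sha256).digest()


def boot(stages):
    """Start each (name, image, expected_tag) stage in order, stopping at the
    first one that fails authentication. Returns the names of started stages."""
    started = []
    for name, image, expected_tag in stages:
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(tag(image), expected_tag):
            print(f"authentication failed for {name}; halting boot")
            break
        started.append(name)  # a real loader would now hand control to the image
    return started


bootloader, firmware, app = b"bootloader", b"rtos image", b"application"
chain = [
    ("bootloader", bootloader, tag(bootloader)),
    ("firmware", firmware, tag(firmware)),
    ("application", app, tag(app)),
]
print(boot(chain))  # ['bootloader', 'firmware', 'application']

# Tampered firmware keeps the old tag, so the chain stops after the bootloader.
tampered = [chain[0], ("firmware", b"rtos image + malware", tag(firmware)), chain[2]]
print(boot(tampered))  # ['bootloader']
```

The point of the design is that nothing later in the chain runs unless everything before it verified; the trust of the whole system therefore reduces to the trust placed in the immutable RoT.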

Privilege modes

Not all software is equal: some software needs more rights than other software to access processor resources. Generally speaking, applications should not have direct access to memory, files, ports, and other hardware resources; safe access to hardware requires dedicated drivers.

Privilege modes in RISC-V

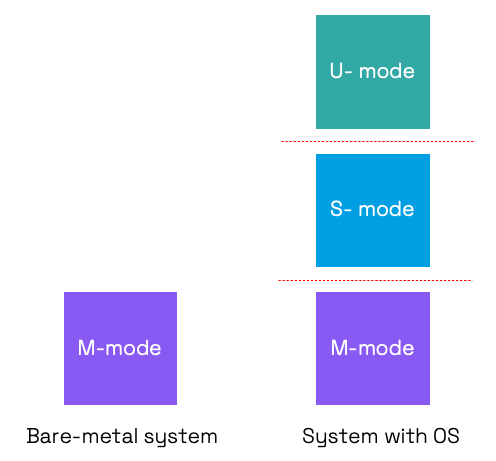

Normally a CPU has privilege modes defined to protect CPU resources from dangerous usage. In RISC-V, three privilege modes are defined in increasing order of control: user-mode (U-mode), supervisor-mode (S-mode) and machine-mode (M-mode).

The modes are used as follows:

- U-mode: for user applications

- S-mode: for the OS kernel including drivers (and hypervisor)

- M-mode: for the bootloader and firmware.

Figure 2: Privilege modes in RISC-V. Source: Codasip.

In RISC-V, machine mode is the most privileged mode, with full access to the hardware. Bare-metal embedded applications and some RTOSs run in machine mode only, an approach that can work for simple systems. Some embedded applications combine user and machine modes. A processor running a rich OS, however, uses all three modes and runs a much more complex set of software.

Privilege modes help control access to CPU resources, reducing the opportunity for malware or buggy programming to abuse them. They can be regarded as providing a vertical separation between modes.

However, privilege modes do not distinguish between the trustworthiness of different applications in the way that execution enclaves can.
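One concrete way RISC-V enforces privilege modes is through its control and status registers (CSRs). By convention, bits [9:8] of a CSR's 12-bit address give the lowest privilege level allowed to access it, and bits [11:10] set to `0b11` mark it read-only; an access that breaks either rule raises an illegal-instruction exception. The Python sketch below models only that address convention. The function name `csr_access_ok` is hypothetical, and the sketch ignores additional gates such as the counter-enable registers and the hypervisor extension.

```python
from enum import IntEnum


class Priv(IntEnum):
    """RISC-V privilege levels and their architectural encodings."""
    U = 0  # user mode
    S = 1  # supervisor mode
    M = 3  # machine mode (encoding 2 is reserved)


def csr_access_ok(csr_addr: int, mode: Priv, write: bool) -> bool:
    """Model the RISC-V CSR address convention. False means the access
    would raise an illegal-instruction exception."""
    min_priv = (csr_addr >> 8) & 0b11               # bits [9:8]: lowest allowed level
    read_only = ((csr_addr >> 10) & 0b11) == 0b11   # bits [11:10] == 0b11: read-only
    if mode < min_priv:
        return False
    return not (write and read_only)


MSTATUS, SSTATUS, CYCLE, MHARTID = 0x300, 0x100, 0xC00, 0xF14

print(csr_access_ok(SSTATUS, Priv.S, write=True))   # True: S-mode may update sstatus
print(csr_access_ok(MSTATUS, Priv.S, write=True))   # False: mstatus is M-mode only
print(csr_access_ok(CYCLE, Priv.U, write=False))    # True: user-readable counter
print(csr_access_ok(MHARTID, Priv.M, write=True))   # False: read-only even in M-mode
```

Because the required level is encoded in the register address itself, the hardware can enforce this vertical separation without any per-register table.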

Memory protection

Because software tasks need to be protected from the buggy or malicious behavior of other tasks, memory protection schemes have been devised to isolate individual tasks.

For example, the RISC-V privileged architecture provides physical memory protection (PMP), which allows multiple physical memory regions to be defined. Access privileges (read, write, execute) can be specified for each region through machine-mode control registers.

This type of memory protection can be used to divide memory into enclaves or execution environments, separating secure tasks from non-secure ones. In hardware, it is implemented by a memory protection unit (MPU).
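A region-based check of this kind can be sketched as follows. This is a simplified Python model that loosely follows RISC-V PMP semantics for accesses made from S-mode or U-mode: regions are checked in priority order, the first matching region decides, and an access that matches no region is denied. The `Region` class, the `check_access` function and the memory map are hypothetical; the real PMP address-matching modes (TOR, NA4, NAPOT), the lock bit, and the encoding of the `pmpcfg`/`pmpaddr` registers are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Protection region [base, base + size) with read/write/execute permissions."""
    base: int
    size: int
    r: bool = False
    w: bool = False
    x: bool = False

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


def check_access(regions, addr: int, kind: str) -> bool:
    """Decide an S-mode or U-mode access of kind 'r', 'w' or 'x'.
    The first (highest-priority) matching region decides; no match means deny."""
    if kind not in ("r", "w", "x"):
        raise ValueError(f"unknown access kind {kind!r}")
    for region in regions:
        if region.contains(addr):
            return getattr(region, kind)
    return False


regions = [
    Region(base=0x2000_0000, size=0x1000),                    # secure data: no access
    Region(base=0x0800_0000, size=0x1_0000, r=True, x=True),  # application code
    Region(base=0x2000_1000, size=0x4000, r=True, w=True),    # application data
]
print(check_access(regions, 0x0800_0100, "x"))  # True
print(check_access(regions, 0x0800_0100, "w"))  # False: code is not writable
print(check_access(regions, 0x2000_0010, "r"))  # False: secure region
print(check_access(regions, 0x4000_0000, "r"))  # False: no region matches
```

Note that the check only knows about regions: any address inside the application data region is equally acceptable, whichever variable it belongs to.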

A memory management unit (MMU) also offers memory protection, by organizing memory into pages of a fixed size. This not only supports virtual memory but also allows each process's pages to be isolated from those of other processes, limiting the harm that one process can inflict on another.
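The isolation an MMU provides comes from giving each process its own mapping from virtual pages to physical frames. The sketch below flattens that into one Python dictionary per process, whereas real RISC-V page tables (such as Sv39) are multi-level and carry per-page permission bits. The names `translate` and `PageFault` are hypothetical; the 4 KiB page size matches the base page size of RISC-V's Sv32 and Sv39 schemes.

```python
PAGE_SIZE = 4096  # 4 KiB base pages, as in RISC-V Sv32/Sv39


class PageFault(Exception):
    """Raised when a virtual address has no mapping for this process."""


def translate(page_table: dict, vaddr: int) -> int:
    """Map a virtual address to a physical one via a per-process table
    of {virtual page number: physical frame number}."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        raise PageFault(f"no mapping for virtual page {vpn:#x}")
    return page_table[vpn] * PAGE_SIZE + offset


process_a = {0x10: 0x80}  # A's virtual page 0x10 -> physical frame 0x80
process_b = {0x10: 0x81}  # B's virtual page 0x10 -> physical frame 0x81

print(hex(translate(process_a, 0x10123)))  # 0x80123
print(hex(translate(process_b, 0x10123)))  # 0x81123

# A has no entry pointing at frame 0x81, so it cannot reach B's memory.
try:
    translate(process_a, 0x11000)
except PageFault as err:
    print("page fault:", err)  # page fault: no mapping for virtual page 0x11
```

Both processes use the same virtual address but land in different physical frames, and a process simply has no way to name a frame that its own table does not map.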

On its own, neither an MPU nor an MMU can distinguish between the trust levels of the running processes. Defining execution enclaves, however, makes it possible to distinguish between trusted and untrusted processes.

Execution enclaves

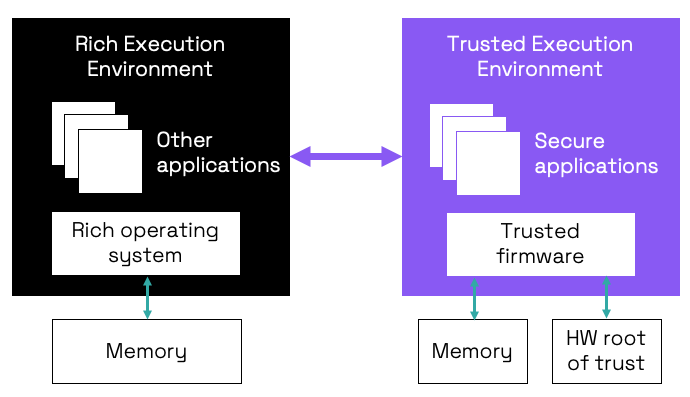

The best-known example of separate execution enclaves is the binary separation into trusted execution environment (TEE) and rich execution environment (REE) as promoted in the mobile phone world.

Figure 3: Simplified representation of binary enclaves. Source: Codasip.

Memory protection is used to ensure that trusted firmware and secure applications have their own memory area and other hardware resources. Together, these hardware and software elements constitute the TEE. A rich OS (such as Android) and non-secure applications have access to a separate memory area, forming the REE. If software in the REE needs to communicate with the secure TEE, it does so through a well-defined interface.

This separation can be regarded as a horizontal one in contrast to the vertical separation of privilege modes. More sophisticated schemes have been proposed using more than two enclaves, but the principle of horizontal separation still applies.
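The TEE/REE split can be modeled in a few lines: memory accesses from the REE are refused inside the secure range, and the only way into the TEE is a fixed table of commands. In this Python sketch the address range, the `SecureService` class and its `sign` command are all hypothetical, and a Python attribute is of course not truly hidden. In a real system, the isolation would be enforced by memory protection hardware and the interface by a dedicated call mechanism.

```python
import hashlib
import hmac

SECURE_RANGE = range(0x1000_0000, 0x1010_0000)  # hypothetical TEE memory


def mem_access_allowed(world: str, addr: int) -> bool:
    """'TEE' may access any address; 'REE' is refused inside the secure range."""
    if addr in SECURE_RANGE:
        return world == "TEE"
    return True


class SecureService:
    """Models the well-defined interface: the REE can only invoke listed commands.
    Command names and the key are hypothetical."""

    def __init__(self):
        self._key = b"secret-that-stays-in-the-tee"  # never returned to the REE
        self._commands = {"sign": self._sign}

    def _sign(self, message: bytes) -> bytes:
        return hmac.new(self._key, message, hashlib.sha256).digest()

    def call(self, command: str, payload: bytes) -> bytes:
        handler = self._commands.get(command)
        if handler is None:
            raise PermissionError(f"command {command!r} is not exposed to the REE")
        return handler(payload)


tee = SecureService()
print(mem_access_allowed("REE", 0x1000_0040))  # False
print(mem_access_allowed("TEE", 0x1000_0040))  # True
print(len(tee.call("sign", b"hello")))         # 32: a MAC, never the key itself
try:
    tee.call("read_key", b"")
except PermissionError as err:
    print(err)  # command 'read_key' is not exposed to the REE
```

The REE gets the result of a secure operation without ever touching the secret it depends on; this is the horizontal separation, independent of which privilege mode the REE code runs in.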

Is this sufficient for ensuring processor integrity?

We have looked at commonly used approaches to ensuring processor integrity. Each provides a degree of protection but also has limitations.

With a chain of trust anchored in hardware, the processor starts safely, provided all the components in the chain are trusted. In open systems such as a mobile phone, the SoC has no control over which apps a user installs, so only the boot of the phone is assured. More generally, companies developing software for SoCs do not normally control all the code they use, because open-source and third-party libraries are widely used.

Vertical separation provides protection against unauthorized use of some hardware resources. Horizontal separation ensures that memory needed for secure applications and firmware is not accessed by untrusted applications in an REE. However, both approaches are coarse-grained and still leave vulnerabilities such as buffer overflows and over-reads possible.
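The following Python sketch illustrates why region-level protection is coarse-grained. It assumes a hypothetical read/write data region holding an 8-byte buffer immediately followed by a secret. A buggy read of 14 bytes from the buffer stays inside the permitted region, so the check passes and the neighboring secret leaks; only an access that leaves the region entirely faults. The layout and the `read` helper are illustrative assumptions, not a model of any particular MPU.

```python
REGION_BASE, REGION_SIZE = 0x2000, 0x1000  # hypothetical read/write data region
memory = bytearray(REGION_SIZE)

# Hypothetical layout: an 8-byte buffer immediately followed by a secret.
NAME_ADDR, NAME_LEN = 0x2000, 8
SECRET_ADDR = NAME_ADDR + NAME_LEN
offset = SECRET_ADDR - REGION_BASE
memory[offset:offset + 6] = b"s3cr3t"


class AccessFault(Exception):
    """Raised when an access leaves the permitted region."""


def read(addr: int, length: int) -> bytes:
    """Read bytes after a region-level check only; buffer bounds are not known."""
    for a in range(addr, addr + length):
        if not REGION_BASE <= a < REGION_BASE + REGION_SIZE:
            raise AccessFault(f"access fault at {a:#x}")
    start = addr - REGION_BASE
    return bytes(memory[start:start + length])


# Buggy caller asks for 14 bytes from the 8-byte buffer: an over-read.
leak = read(NAME_ADDR, 14)
print(leak[NAME_LEN:])  # b's3cr3t': the neighboring secret leaks

# Only an access that leaves the region entirely is caught.
try:
    read(REGION_BASE + REGION_SIZE - 4, 8)
except AccessFault as err:
    print(err)  # access fault at 0x3000
```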

In the next episode, we will explore the root causes and risks of buffer bounds vulnerabilities.