What is memory protection granularity?

Memory protection granularity defines the smallest unit of a protected memory area, ranging from coarse-grained memory regions to fine-grained objects or even individual bytes.

The choice of memory protection granularity depends on the design and security objectives of the system.
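To make the definition concrete, here is a small sketch that computes which address range actually has to be protected when an object does not line up with the protection unit (the "granule"). It is a hypothetical Python model, and the function names `protected_span` and `exposed_bytes` are illustrative, not part of any real API. The point it shows is that every byte sharing a granule with the object inherits the object's permissions, so coarser granules expose more neighboring memory.

```python
def protected_span(start: int, length: int, granule: int) -> tuple[int, int]:
    """Return the [lo, hi) range that must be protected to cover the object
    [start, start + length) when protection can only be applied in whole
    units of `granule` bytes (hypothetical helper)."""
    if length <= 0 or granule <= 0:
        raise ValueError("length and granule must be positive")
    lo = (start // granule) * granule                 # round the start down
    hi = -(-(start + length) // granule) * granule    # round the end up
    return lo, hi


def exposed_bytes(start: int, length: int, granule: int) -> int:
    """Bytes that receive the object's permissions without belonging to it."""
    lo, hi = protected_span(start, length, granule)
    return (hi - lo) - length
```

For a 100-byte buffer at address `0x1010`, a 4 KB granule forces protection of the whole page from `0x1000` up to `0x2000`, i.e. 3,996 bytes that are not part of the buffer, whereas a 4-byte granule covers the buffer exactly.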

Processor privilege levels, or modes, are the most common form of memory protection. The most privileged mode (for example, RISC-V’s machine mode) is inherently trusted because it has full access to the processor’s memory and resources. Software running in this mode defines the memory access rules for less privileged modes. These rules are typically enforced by hardware components such as Memory Management Units (MMUs) or Memory Protection Units (MPUs), which differ in the granularity they support.
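The relationship between modes can be sketched as a small Python model, loosely based on RISC-V’s privilege levels (user = 0, supervisor = 1, machine = 3). The `AccessRules` class and its methods are hypothetical; real mechanisms such as RISC-V physical memory protection have further details (lock bits, address-matching modes) that this sketch deliberately ignores. It captures two properties: only machine-mode software may define the rules, and the rules only constrain less privileged modes.

```python
from enum import IntEnum


class Mode(IntEnum):
    # RISC-V privilege levels, ordered from least to most privileged.
    USER = 0
    SUPERVISOR = 1
    MACHINE = 3


class AccessRules:
    """Hypothetical model: machine mode edits the rules, and the rules
    apply only to less privileged modes."""

    def __init__(self) -> None:
        self._rules: list[tuple[int, int, str]] = []   # (base, size, perms)

    def set_rule(self, caller: Mode, base: int, size: int, perms: str) -> None:
        # Only the trusted, most privileged mode may define access rules.
        if caller != Mode.MACHINE:
            raise PermissionError("only machine mode may define access rules")
        self._rules.append((base, size, perms))

    def allowed(self, mode: Mode, addr: int, kind: str) -> bool:
        """`kind` is 'r', 'w' or 'x'. The first matching rule decides."""
        if mode == Mode.MACHINE:
            return True               # trusted: full access (lock bits ignored)
        for base, size, perms in self._rules:
            if base <= addr < base + size:
                return kind in perms
        return False                  # no matching rule: deny
```

For example, after machine mode installs a read/execute rule for a firmware region, user-mode code can fetch instructions from it but cannot write to it, and a user-mode attempt to install its own rule raises `PermissionError`.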

Memory protection granularity variants

MMUs protect individual pages, typically 4 KB in size. Page-based memory protection enables efficient use of virtual memory and is common in high-end systems, including servers, desktops, and mobile devices. However, because of their hardware complexity, the memory needed for page tables, and non-deterministic timing (for example, when a translation lookaside buffer (TLB) miss forces a page-table walk), MMUs are generally unsuitable for embedded devices with tight resource constraints and real-time requirements. Such systems use MPU-based protection instead.

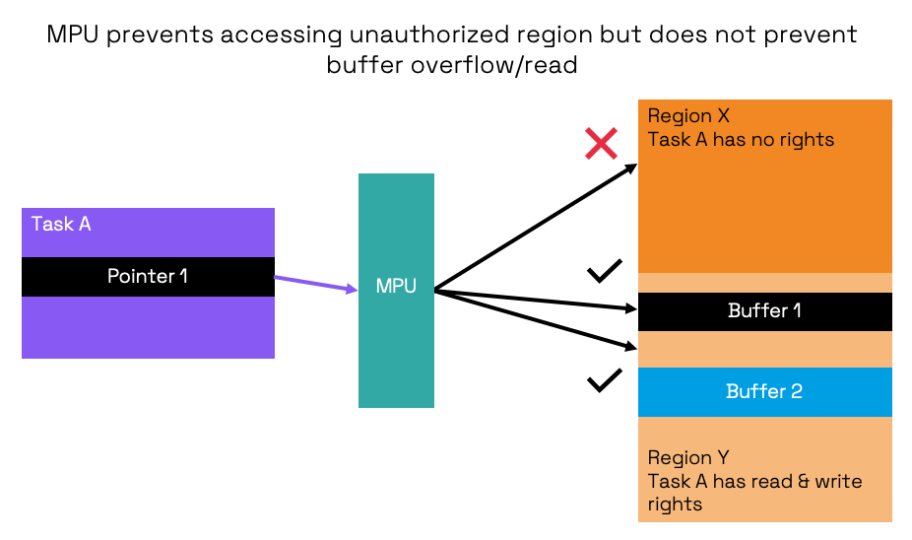
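A page-based check can be modeled as a lookup keyed by the virtual page number, which for 4 KB pages is simply the address shifted right by 12 bits. The sketch below assumes a hypothetical single-level page table stored as a Python dict; real MMUs use multi-level tables cached in a TLB, which is where their memory cost and timing variability come from. Permissions attach to the whole page, so every byte within the same 4 KB page is treated identically.

```python
PAGE_SHIFT = 12                  # 4 KB pages: the low 12 bits are the offset
PAGE_SIZE = 1 << PAGE_SHIFT
OFFSET_MASK = PAGE_SIZE - 1


class PageFault(Exception):
    """Raised for an access to an unmapped page or without permission."""


def translate(page_table: dict[int, tuple[int, set[str]]],
              vaddr: int, kind: str) -> int:
    """Translate a virtual address using a hypothetical single-level page
    table mapping virtual page number -> (physical page number, perms).
    `kind` is 'r', 'w' or 'x'; permissions apply to the whole page."""
    vpn = vaddr >> PAGE_SHIFT
    offset = vaddr & OFFSET_MASK
    entry = page_table.get(vpn)
    if entry is None:
        raise PageFault(f"page {vpn:#x} is not mapped")
    ppn, perms = entry
    if kind not in perms:
        raise PageFault(f"{kind!r} access not permitted on page {vpn:#x}")
    return (ppn << PAGE_SHIFT) | offset
```

With a table such as `{0x10: (0x80, {"r", "w"})}`, any address from `0x10000` to `0x10FFF` is writable and maps into physical page `0x80`; the MMU cannot distinguish one object from another inside that page.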

MPUs protect memory regions. In theory, MPU regions offer more flexible granularity than fixed-size MMU pages, down to a single word. In practice, however, the hardware constraints of embedded devices favor MPU regions that are coarse-grained and few in number (usually between 8 and 16 regions).

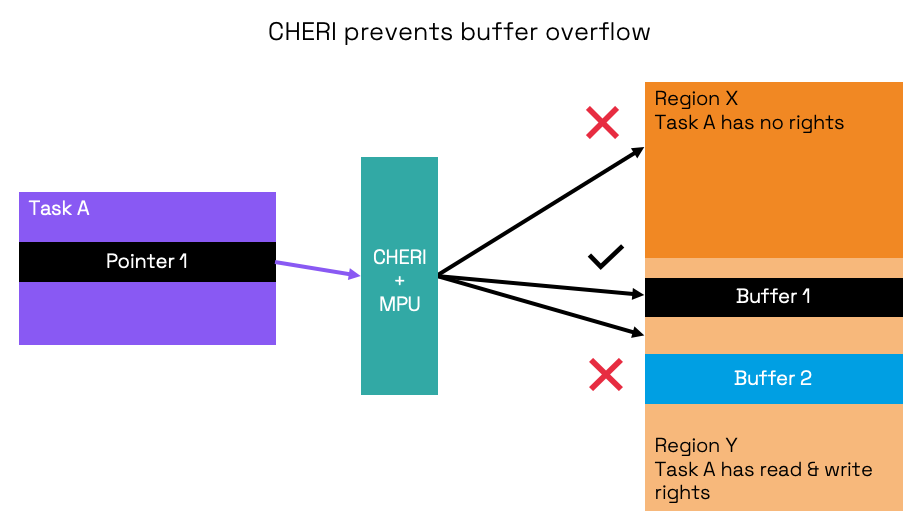
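The trade-off between flexible region sizes and a small number of regions can be illustrated with a toy MPU model. The `Mpu` class, its 8-slot default, and the addresses used with it are assumptions for illustration, not a description of any specific vendor’s MPU. Regions may have any size, but once every slot is programmed, no further object can get a region of its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    base: int                 # start address (no translation in an MPU)
    size: int                 # arbitrary size in bytes, even a single word
    perms: frozenset[str]     # subset of {"r", "w", "x"}


class Mpu:
    """Toy MPU with a fixed number of region slots (hypothetical model)."""

    def __init__(self, num_regions: int = 8) -> None:
        self.num_regions = num_regions
        self.regions: list[Region] = []

    def add_region(self, base: int, size: int, perms: str) -> bool:
        """Program one region; returns False once all slots are in use."""
        if len(self.regions) == self.num_regions:
            return False
        self.regions.append(Region(base, size, frozenset(perms)))
        return True

    def check(self, addr: int, kind: str) -> bool:
        """The first region containing addr decides; no match means deny."""
        for region in self.regions:
            if region.base <= addr < region.base + region.size:
                return kind in region.perms
        return False
```

Trying to give each of 20 separate 64-byte buffers its own region fails after the eighth one. The practical alternative is a single region covering all 20 buffers, but then a write that overruns one buffer into the next still lands inside the same region and is allowed.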

MMU- and MPU-based memory protection cannot provide the fine granularity needed to effectively counteract memory safety vulnerabilities such as buffer overflows. Heartbleed is a well-known example: a missing bounds check in OpenSSL caused a buffer over-read that allowed attackers to steal confidential server information, such as private keys. Detecting such localized memory violations within a program requires a fine-grained memory protection mechanism, for example one that ensures pointers are used only within the bounds of the objects they refer to.
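The kind of check that MMUs and MPUs cannot provide can be sketched with a hypothetical "fat pointer" that carries the bounds of the object it points to. This is a simplified Python model of the Heartbleed pattern (a reply that echoes back as many bytes as the request claims to contain), not OpenSSL’s actual code; `BoundedPointer`, `BoundsError`, and the echo functions are illustrative names. The payload and the secret sit in the same 4 KB page, so page- or region-level protection would permit the over-read, whereas the per-object bounds check rejects it.

```python
class BoundsError(Exception):
    """Raised when an access falls outside the pointed-to object."""


class BoundedPointer:
    """Hypothetical fat pointer: an address plus the bounds of its object."""

    def __init__(self, memory: bytearray, base: int, length: int) -> None:
        self.memory = memory
        self.base = base          # first byte of the object
        self.length = length      # object size in bytes

    def read(self, count: int) -> bytes:
        # Every access is checked against the object's own bounds,
        # not against a page or region boundary.
        if count < 0 or count > self.length:
            raise BoundsError(
                f"read of {count} bytes exceeds {self.length}-byte object")
        return bytes(self.memory[self.base:self.base + count])


def echo_unchecked(memory: bytearray, base: int, claimed_len: int) -> bytes:
    # Trusts the length supplied in the request (the Heartbleed bug pattern).
    return bytes(memory[base:base + claimed_len])


def echo_checked(ptr: BoundedPointer, claimed_len: int) -> bytes:
    # Same logic, but the read goes through a bounds-checked pointer.
    return ptr.read(claimed_len)


# One 4 KB page holding a 4-byte payload followed by secret data.
page = bytearray(4096)
page[0:4] = b"ping"
page[4:20] = b"SECRET-KEY-BYTES"
payload = BoundedPointer(page, base=0, length=4)
```

Asking `echo_unchecked` for 20 bytes returns the payload followed by the secret, while the same request through `echo_checked` raises `BoundsError` because it exceeds the 4-byte object.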