If you are joining me now in this formal verification blog series, welcome! In episode 1, we looked at best practices to set up formal verification on a specific component. In episode 2, we focused on the verification of a deadlock bug. In episode 3, we spent time setting up the formal testbench for end-to-end properties. In this episode, let’s focus on data corruption.

End-to-end assertions for data checking

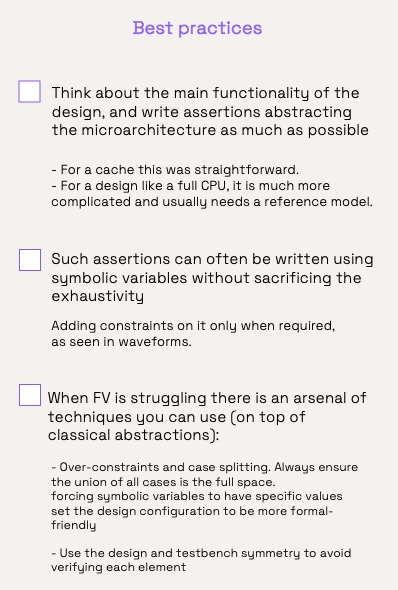

We are now almost ready to verify two new major assertions (and their derivatives). Without knowing anything about the design microarchitecture, we can still write these assertions.

- After a write request to an address A, with a data D, the following read request to A will return D. This must be true regardless of each request hitting or missing in the cache. The line holding A may even be evicted between the two requests.

- Two consecutive read requests to the same address return the same data.

Interestingly, at that point a new bug was found in the design by simulation, caused by the fix for the deadlock discussed in the second episode. It was not found by FV so far, because it is a data corruption bug while our formal testbench was only able to catch control bugs. So let’s fix that.

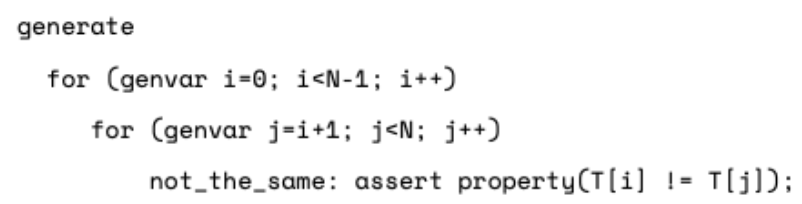

We started by writing these two assertions using a well-known FV technique called “symbolic variables” (sometimes called “free”, “undeterministic”, or “oracles”). It consists in verifying only one element, without specifying which one. With FV, it means exhaustively verifying all elements. For example, when checking that all cells in an array have different values, you can use two nested loops:

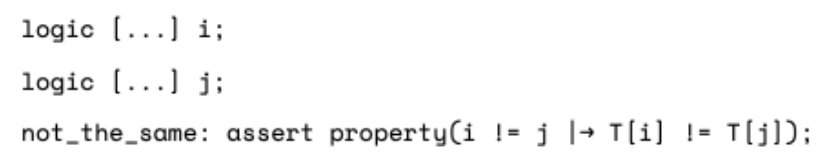

Using oracles, that would be:

The difference between these two forms is that in the first one, you have many assertions to prove, while in the second one there’s only one assertion… which is more complex! Note that the properties using oracles cannot be constraints, and they cannot be used in simulation.

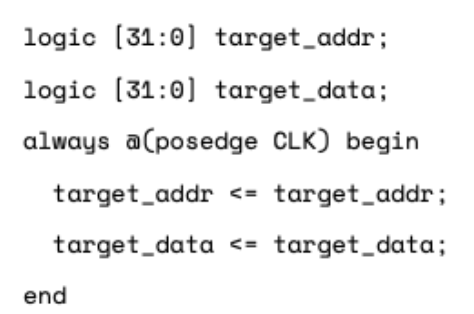

Here our elements are an address and a data. They are defined to be constant signals, with no defined values. They must be constant because used at different cycles:

The two assertions check requests for this oracle address in sequences and ensure the last read request gets the correct data:

Note that we simplified the assertions here, considering read_data is available exactly one cycle after the request, which is not true in our real formal testbench.

We quickly had failures. Easy! We need to model the external memory. This is done by connecting another instance of the CAM. Because the data now matters, we also needed to replace the black-boxed dataram arrays with CAM instances. Here, no extra constraint is needed: the initial content of the external memory and the dataram arrays can be anything.

Speeding up formal verification

This was enough to reproduce the data corruption bug found earlier by simulation. To investigate more around it, we decided to be less formal. The above assertions verify all addresses and all possible data. And the new assertions make the data signals also part of the formal exploration. That is huge and prevented us from getting full proofs, even after running for a couple of days on a large farm. So we needed to reduce this space.

Reducing the verification space at different levels

We did that at different levels with over-constraints and case-splitting.

- The most aggressive one was to drive the target_addr and target_data signals with fixed values. This allowed us to get proofs on the two assertions. We successfully checked with a few different values, but of course it is impossible to check all. Nevertheless, this greatly improved our confidence.

- We fixed only subranges of these signals. Depending on these ranges, we got exhaustive or only bounded proofs.

- We let the signals be fully symbolic, but checked only one bit of the data. Again, we got full proofs.

- We derived the two assertions above to more specific ones, specifying whether each request is cacheable or not, and whether it hits or misses in the cache. The testbench can predict whether each request will be a hit or a miss by comparing the request address with the content of the tagram CAM. This is very efficient while not reducing the exhaustivity of the verification because the union of all cases is the full space.

- Knowing the design and the formal testbench, it is useful to exploit the symmetry and to reduce the number of properties. For example, instead of verifying what happens in case of a hit, we verify what happens in case of a hit only in way, say, 2, and only in the first element of the tagram CAM. This is valid because we have added an abstraction on the eviction policy, which makes all ways equally reachable. And we can even verify that by placing cover properties for read or write in each way: they are covered with the same bound.

Choosing a formal-friendly configuration

Looking at waveforms, we identified that two features are huge “cycle consumers”, which is not good for FV: linefills and evictions trigger read and write burst requests to the external memory which spawns for many cycles. For example, a scenario showing two consecutive read requests which miss at the same address A is (in a write-back configuration):

- First read request at address A

- linefill: Read request at address A in external memory (burst of size N)

- eviction: Write request at address C (same index as A) in external memory (burst of size N)

- Read or write request at address B (same index as A)

- linefill: Read request at address B in external memory (burst of size N)

- eviction: Write request at address A in external memory (burst of size N)

- Second read request at address A

- linefill: Read request at address A in external memory (burst of size N)

You can see that a design configuration with a small N makes it easier for FV to consider this scenario.

This week’s good practices

We saw how we wrote very simple assertions targeting the verification of the main behavior of the cache. Because it introduces a lot of complexity, we had to use several tricks summarized below.

At this point we were almost done with the cache formal verification. We efficiently verified AHB protocol compliance, deadlocks, an essential assertion, and data integrity. We could have cooled down a bit, but no. I’ll tell you more in the next and final episode!